How to Ignore Patch Size in Transformers: A Comprehensive Guide

In recent years, transformers have significantly impacted deep learning, particularly in natural language processing (NLP) and computer vision. A critical aspect of Vision Transformers (ViTs) is the concept of patch size, which defines how input images are divided into smaller patches for processing. While patch size plays a crucial role in determining the efficiency and performance of transformers, there are situations where it is advantageous to bypass or ignore fixed patch sizes.

In this guide, we will explore how to ignore the patch size in transformers, providing detailed explanations, techniques, and steps to enhance the flexibility and performance of models that use transformers in computer vision.

Introduction to Transformers in Deep Learning

Transformers are a type of neural network architecture initially developed for natural language processing (NLP). They have since made their way into computer vision tasks, leading to the development of Vision Transformers (ViTs). ViTs apply the core principles of transformers—attention mechanisms, in particular—to image data by dividing images into smaller segments called patches.

Unlike convolutional neural networks (CNNs), where filters slide over the image, ViTs split the image into non-overlapping patches and treat each patch as a token. This recasts image classification as a sequence problem, similar to NLP. However, the size of these patches (the patch size) plays a critical role in the model’s performance.

In many cases, the patch size can act as a limitation, making it harder to adapt transformers to different tasks or to images with different resolutions. Fortunately, there are ways to relax these constraints and gain flexibility, ideally without sacrificing performance.

Understanding Patch Size in Vision Transformers

What is Patch Size?

Patch size refers to the dimensions of the image segments that are fed into the transformer. Each patch is flattened and processed as an individual token, similar to a word in a text sequence. For example, in a Vision Transformer with a patch size of 16×16 pixels, a 256×256 image is divided into a 16×16 grid of 256 patches.

These patches are then linearly embedded and treated as tokens, which the transformer processes using attention. This tokenization keeps large-scale image classification tractable: treating every pixel of a 224×224 image as a token would produce 50,176 tokens, whereas 16×16 patches reduce the same image to 196 tokens.
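To make the tokenization step concrete, here is a minimal NumPy sketch that splits an image into non-overlapping patches, flattens them, and applies one shared linear projection. The embedding width (64) and the random projection matrix are illustrative assumptions; a trained ViT learns this matrix and usually uses a much larger width.

```python
import numpy as np

def patchify(image: np.ndarray, patch: int) -> np.ndarray:
    """Split an (H, W, C) image into non-overlapping patch×patch tiles and
    flatten each tile, returning shape (num_patches, patch*patch*C)."""
    H, W, C = image.shape
    assert H % patch == 0 and W % patch == 0, "image size must be a multiple of the patch size"
    gh, gw = H // patch, W // patch
    tiles = image.reshape(gh, patch, gw, patch, C)   # split rows and columns into tiles
    tiles = tiles.transpose(0, 2, 1, 3, 4)           # (gh, gw, patch, patch, C)
    return tiles.reshape(gh * gw, patch * patch * C) # one flattened row per patch, row-major order

rng = np.random.default_rng(0)
image = rng.random((256, 256, 3))
patches = patchify(image, 16)                        # (256, 768): a 16×16 grid of patches

# Linear embedding: one hypothetical projection matrix shared by every patch.
embed_dim = 64
W_embed = rng.normal(scale=0.02, size=(16 * 16 * 3, embed_dim))
tokens = patches @ W_embed                           # (256, 64): 256 tokens of width 64
print(patches.shape, tokens.shape)                   # (256, 768) (256, 64)
```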

Standard Patch Size Configurations

Common configurations for patch size in Vision Transformers include 16×16 and 32×32 pixel patches. These sizes are typically chosen based on a trade-off between computational efficiency and the model’s ability to capture fine details within the image. Smaller patches offer more granularity, which improves feature extraction but at the cost of increased computational resources.

On the other hand, larger patches reduce computational costs but may miss out on important details, especially in high-resolution images or tasks requiring fine-grained analysis. The choice of patch size must be balanced with the nature of the task and the available computational power.

How Patch Size Affects Performance

Patch size directly affects the transformer’s efficiency, memory consumption, and performance. The number of patches increases as the patch size decreases, which, in turn, increases the length of the token sequence processed by the transformer. This leads to higher memory usage and longer computation times.

For example, reducing the patch size from 32×32 to 16×16 for a 224×224 image results in four times as many patches, thereby increasing the computational load. At the same time, smaller patches improve the model’s ability to capture subtle features and patterns, making it more effective for tasks that require high-resolution details, such as object detection or segmentation.

The fixed patch size also causes problems when dealing with images of different sizes or resolutions. If an image’s height or width is not a multiple of the patch size, the image must be cropped, padded, or resized; and if the resolution changes, the number of patches no longer matches the number of positional embeddings the model learned, so the model may need to be modified or fine-tuned.
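When the image size is not a multiple of the patch size, one common workaround (assumed here, not prescribed by this guide) is to pad the image up to the next multiple. The sketch below zero-pads the bottom and right edges; `pad_to_multiple` is a hypothetical helper. Note that padding fixes divisibility but not the mismatch between the new number of patches and the positional embeddings the model was trained with.

```python
import numpy as np

def pad_to_multiple(image: np.ndarray, patch: int) -> np.ndarray:
    """Zero-pad the bottom and right edges of an (H, W, C) image so that
    H and W become multiples of `patch`."""
    H, W, _ = image.shape
    pad_h = (-H) % patch   # extra rows needed to reach the next multiple
    pad_w = (-W) % patch   # extra columns needed to reach the next multiple
    return np.pad(image, ((0, pad_h), (0, pad_w), (0, 0)), mode="constant")

img = np.ones((230, 300, 3))
padded = pad_to_multiple(img, 16)
print(padded.shape)                                      # (240, 304, 3)
print((padded.shape[0] // 16) * (padded.shape[1] // 16)) # 285 patches (15 × 19)
```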

Challenges of Patch Size in Transformers

Handling Images of Different Sizes

One of the most significant limitations of fixed patch sizes is the difficulty of handling images with varying resolutions or dimensions. When the patch size is fixed, transformers often struggle to generalize across image sizes. For example, a ViT trained with 16×16 patches on 224×224 images learns positional embeddings for a 14×14 grid (196 patches); a 128×128 image yields only an 8×8 grid (64 patches) and a 512×512 image a 32×32 grid (1,024 patches), so the model may not perform well on either without adjustment.

This inflexibility can hinder the transformer’s ability to be applied across different datasets or tasks that involve images of varying sizes, requiring either architectural changes or retraining, both of which can be resource-intensive.

Computational and Memory Overhead

As the patch size decreases, the number of patches (tokens) increases, which leads to longer sequences for the transformer to process. This results in higher memory usage and longer computation times during both training and inference. While smaller patches provide more detailed information, they also significantly increase the computational complexity of the transformer.

For example, in a standard Vision Transformer, decreasing the patch size from 32×32 to 16×16 increases the number of tokens fourfold. Because self-attention has quadratic complexity in sequence length, this fourfold increase in tokens makes the attention computation roughly sixteen times more expensive.
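The arithmetic behind this scaling can be checked directly. The sketch below counts tokens for a 224×224 image and the size of one head’s n × n attention score matrix; it ignores the class token and all computation outside self-attention, so it illustrates the trend rather than total FLOPs.

```python
def num_tokens(height: int, width: int, patch: int) -> int:
    """Number of patch tokens for an image whose sides are multiples of `patch`."""
    return (height // patch) * (width // patch)

for p in (32, 16):
    n = num_tokens(224, 224, p)
    # Self-attention builds an n × n score matrix per head, so this term grows as n**2.
    print(f"patch {p}: {n} tokens, {n * n} attention scores per head")
# patch 32: 49 tokens, 2401 attention scores per head
# patch 16: 196 tokens, 38416 attention scores per head
```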

Overfitting and Underfitting

Incorrect patch size selection can lead to overfitting or underfitting. Smaller patch sizes may lead to overfitting, as the model may focus too heavily on minor details and noise in the training data. On the other hand, larger patch sizes may lead to underfitting, where the model fails to capture important details necessary for accurate predictions.

Striking a balance between capturing fine details and minimizing computational overhead is essential when selecting patch sizes, especially for models designed for diverse tasks or datasets.

Ways to Ignore Patch Size in Transformers

Several techniques can be used to ignore or adapt patch size in transformers, making the models more flexible and capable of handling varying image resolutions.

Dynamic Patch Embedding Techniques

One method to ignore patch size is dynamic patch embedding. Instead of hard-coding the patch size, the patch embedding layer tokenizes each image with whichever patch size suits its resolution or the task, typically by resizing the embedding weights so that every patch size maps to the same embedding dimension. Because the rest of the transformer only sees a sequence of fixed-width tokens, the same model can then be used with different patch sizes during both training and inference, without manual adjustments or retraining.
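One concrete way to implement dynamic patch embedding, assumed here and similar in spirit to the pseudo-inverse resize proposed in the FlexiViT paper, is to keep a single base kernel and resize it to each new patch size so that a patch and its bilinearly resized version produce the same token. The helper names are hypothetical, and bilinear resizing with half-pixel centres is a simplifying choice.

```python
import numpy as np

def linear_resize_matrix(src: int, dst: int) -> np.ndarray:
    """(dst, src) matrix that linearly resamples a length-`src` signal to
    length `dst`, using half-pixel sample centres."""
    R = np.zeros((dst, src))
    scale = src / dst
    for i in range(dst):
        s = min(max((i + 0.5) * scale - 0.5, 0.0), src - 1)  # source coordinate, clamped
        lo = int(np.floor(s))
        hi = min(lo + 1, src - 1)
        frac = s - lo
        R[i, lo] += 1.0 - frac
        R[i, hi] += frac
    return R

def resize_patch(patch: np.ndarray, new_size: int) -> np.ndarray:
    """Bilinearly resize a (C, p, p) image patch to (C, new_size, new_size)."""
    R = linear_resize_matrix(patch.shape[-1], new_size)
    return np.einsum("ih,chw,jw->cij", R, patch, R)

def resize_patch_embedding(weight: np.ndarray, new_size: int) -> np.ndarray:
    """Map a (D, C, p, p) patch-embedding kernel to (D, C, q, q) so that
    <w_new, resize_patch(x)> matches <w_old, x> (exactly when q >= p)."""
    D, C, p, _ = weight.shape
    R = linear_resize_matrix(p, new_size)
    M = np.kron(R, R)                       # flattened p×p patch -> flattened q×q patch
    w_old = weight.reshape(D * C, p * p).T  # one column per (output dim, channel) kernel
    w_new = np.linalg.pinv(M.T) @ w_old     # least-squares solution of M.T @ w_new = w_old
    return w_new.T.reshape(D, C, new_size, new_size)

rng = np.random.default_rng(0)
w16 = rng.normal(size=(8, 3, 16, 16))       # hypothetical base kernel: 8-dim tokens, RGB
x16 = rng.normal(size=(3, 16, 16))
w24 = resize_patch_embedding(w16, 24)
x24 = resize_patch(x16, 24)
old = np.einsum("dchw,chw->d", w16, x16)
new = np.einsum("dchw,chw->d", w24, x24)
print(np.allclose(old, new))                # True
```

When the new patch size is larger than the base one, the least-squares solve is exact, as the check shows; when it is smaller, information is lost and tokens are only approximately preserved.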

Flexible Patch Sizes in Training and Inference

A flexible approach modifies the model’s input layer so that the patch embedding can process patches of different sizes, mapping each of them to the same token dimension. This allows the model to adapt to images with varying resolutions and dimensions, making it more versatile for real-world applications.

For example, during training the patch size used to tokenize each batch can be varied, so the model learns features across multiple scales. At inference time, the model can then process images of various resolutions without architectural changes.
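A training loop that varies the patch size might pick one patch size per batch, as in the hedged sketch below. The 240-pixel input and the candidate list are hypothetical values chosen so that every patch size divides the image evenly; resizing the kernel and positional embeddings is only indicated in a comment.

```python
import random

# Hypothetical setup: a fixed 240×240 input and a pool of patch sizes that all divide 240.
IMAGE_SIZE = 240
PATCH_SIZES = [8, 10, 12, 15, 16, 20, 24, 30, 40, 48]

def sample_patch_size(rng: random.Random) -> int:
    """Pick the patch size used to tokenize every image in the next batch."""
    return rng.choice(PATCH_SIZES)

rng = random.Random(0)
for step in range(5):
    p = sample_patch_size(rng)
    seq_len = (IMAGE_SIZE // p) ** 2
    # In a real loop: resize the patch-embedding kernel and positional embeddings
    # to patch size p, tokenize the batch, then run the usual forward/backward pass.
    print(f"step {step}: patch {p}, sequence length {seq_len}")
```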

Multi-Scale Training with Vision Transformers

Multi-scale training is another way to bypass the fixed patch size limitation. The transformer is trained on images at multiple resolutions (or, with a similar effect on sequence length, with multiple patch sizes), so it learns representations that are robust to changes in scale and in the number of tokens.

This makes the model better equipped to generalize to new datasets and more robust when it encounters images with different dimensions during inference.
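Multi-scale training with a fixed patch size can be sketched by resizing each batch to a randomly chosen resolution. The scales are hypothetical, and nearest-neighbour resizing stands in for whatever (usually smoother) resampling a real data pipeline would use; the point is that the sequence length changes from step to step.

```python
import numpy as np

def resize_nearest(image: np.ndarray, size: int) -> np.ndarray:
    """Nearest-neighbour resize of an (H, W, C) image to (size, size, C)."""
    H, W, _ = image.shape
    rows = (np.arange(size) * H / size).astype(int)  # source row for each output row
    cols = (np.arange(size) * W / size).astype(int)  # source column for each output column
    return image[rows[:, None], cols[None, :]]

PATCH = 16
SCALES = [160, 224, 288]  # hypothetical training resolutions, all multiples of PATCH

rng = np.random.default_rng(0)
image = rng.random((256, 256, 3))
for step in range(3):
    size = int(rng.choice(SCALES))
    resized = resize_nearest(image, size)
    tokens = (size // PATCH) ** 2  # 100, 196 or 324 tokens depending on the scale
    print(step, resized.shape, tokens)
```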

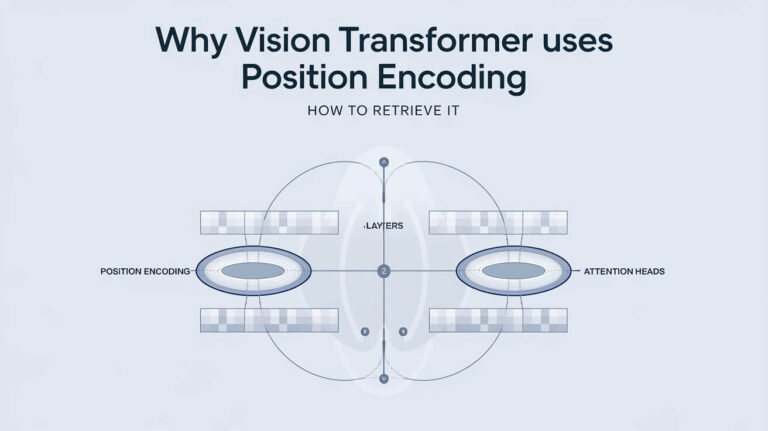

Positional Embedding Flexibility

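Positional embeddings tell the transformer where each token comes from. In a standard ViT they are learned, one vector per patch position on a fixed grid (14×14 for 224×224 images with 16×16 patches). When the patch size or image resolution changes, the grid changes, so the positional embeddings must be adjusted to keep the spatial relationships between patches consistent.

Flexible positional embeddings allow the model to adapt to varying patch sizes without losing important spatial information. A common approach is to treat the learned embeddings as a 2D grid and interpolate them to the new grid size, as is done when fine-tuning ViTs at a higher resolution. In this way, the model can ignore the fixed patch size constraint while still maintaining an understanding of the spatial structure of the image.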
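The 2D interpolation can be sketched in a few lines of NumPy. This assumes a row-major square grid with no class token (in a ViT, the class-token embedding would be set aside and reattached unchanged) and uses bilinear weights built with `np.interp`; `resize_pos_embed` is a hypothetical name, and many implementations use bicubic rather than bilinear interpolation.

```python
import numpy as np

def interp_matrix(src: int, dst: int) -> np.ndarray:
    """(dst, src) matrix of 1D linear-interpolation weights (half-pixel centres,
    edge values repeated outside the source range)."""
    old = np.arange(src)
    new = (np.arange(dst) + 0.5) * src / dst - 0.5
    eye = np.eye(src)
    # Column k holds the weight each output position gives to source position k.
    return np.stack([np.interp(new, old, eye[k]) for k in range(src)], axis=1)

def resize_pos_embed(pos: np.ndarray, new_grid: int) -> np.ndarray:
    """Resize (g*g, D) positional embeddings on a g×g grid to (new_grid**2, D)."""
    n, D = pos.shape
    g = int(round(n ** 0.5))
    assert g * g == n, "expects a square patch grid (no class token)"
    R = interp_matrix(g, new_grid)
    grid = pos.reshape(g, g, D)
    out = np.einsum("ih,hwd,jw->ijd", R, grid, R)  # bilinear: interpolate rows and columns
    return out.reshape(new_grid * new_grid, D)

rng = np.random.default_rng(0)
pos_224 = rng.normal(size=(14 * 14, 768))  # hypothetical table: 224 px input, 16 px patches
pos_512 = resize_pos_embed(pos_224, 32)    # 512 px input, 16 px patches -> 32×32 grid
print(pos_512.shape)                       # (1024, 768)
```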

Skip Connections for Multi-Scale Features

Skip connections can help the transformer capture features across multiple patch sizes by allowing information to flow across different layers. In vision tasks, skip connections are commonly used to combine features from different scales, allowing the model to learn both fine and coarse details.

In transformers, skip connections can be employed to handle variable patch sizes by merging token features computed at different patch sizes, for example by upsampling the coarser token grid to the finer one and adding the two. This helps the model capture relevant information at multiple scales without being constrained by a single fixed patch size.
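A minimal sketch of merging features from two patch sizes, assuming square token grids where the coarse grid evenly divides the fine one: each coarse token is copied onto the fine cells it covers and added, the same additive pattern as a skip connection. This is an illustrative fusion rule, not a specific published architecture; real multi-scale designs often add learned projections or concatenate features instead.

```python
import numpy as np

def fuse_scales(fine: np.ndarray, coarse: np.ndarray) -> np.ndarray:
    """Add coarse-grid token features onto a finer grid.

    fine:   (gf, gf, D) tokens from small patches
    coarse: (gc, gc, D) tokens from large patches, with gf a multiple of gc
    """
    factor = fine.shape[0] // coarse.shape[0]
    assert coarse.shape[0] * factor == fine.shape[0], "coarse grid must divide the fine grid"
    # Nearest-neighbour upsampling: repeat each coarse token over its factor × factor block.
    up = np.repeat(np.repeat(coarse, factor, axis=0), factor, axis=1)
    return fine + up  # additive, skip-connection-style merge

rng = np.random.default_rng(0)
fine = rng.normal(size=(14, 14, 32))    # e.g. 16 px patches on a 224 px image
coarse = rng.normal(size=(7, 7, 32))    # e.g. 32 px patches on the same image
fused = fuse_scales(fine, coarse)
print(fused.shape)                      # (14, 14, 32)
```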

Case Study: Vision Transformers Without Fixed Patch Size

Consider a case study in which Vision Transformers are used without a fixed patch size: a model is trained with dynamic patch embedding and flexible positional encodings so that the patch size can vary during training and inference.

In such a setup, the expected benefit is that the model keeps its accuracy across images of varying resolutions without retraining the architecture. Dynamic patching also gives control over compute: larger patches keep the token count, and therefore the cost, manageable for large images, while smaller patches can be used where fine detail matters.

Because it no longer depends on a single patch size and can handle a wide range of image resolutions, the model would also be expected to generalize better when applied to new datasets.

Technical Steps to Modify Transformer Architecture to Ignore Patch Size

Adjusting the Patch Embedding Layer

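The first step in creating a transformer that ignores patch size is to adjust the patch embedding layer. This layer is usually a linear projection of each flattened patch (equivalently, a convolution whose kernel size and stride both equal the patch size), so its input dimension is tied to the patch size. To accept different patch sizes, its weights can be resized to each new patch size while the output embedding dimension stays fixed, leaving the rest of the transformer unchanged.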

Multi-Scale Training

Implementing multi-scale training in transformers involves training the model on images at different scales. By varying the input resolution (or the patch size) from batch to batch during training, the model learns to extract features across multiple resolutions.

Flexible Positional Embedding

To allow transformers to process variable patch sizes, flexible positional embeddings must be employed. These embeddings are resized, typically by 2D interpolation, to match the grid of patches produced by the current patch size and resolution, so the model maintains spatial awareness even when the patch size changes.

Conclusion

Ignoring patch size in transformers offers significant advantages: models can handle images of varying resolutions, generalize better, and trade off computational cost against detail. By employing techniques such as dynamic patch embedding, flexible positional embeddings, and multi-scale training, transformers can become more adaptable and versatile across a wide range of tasks and datasets.