Why Vision Transformers Use Positional Encoding and How It Helps Image Retrieval

Vision Transformers (ViTs) have reshaped computer vision, especially in tasks like image classification and retrieval. Transformers were originally designed for natural language processing, but they have proven effective on visual data as well. A key ingredient in this success is positional encoding. It restores the spatial information that is lost when an image is cut into a sequence of patches. This blog explores why Vision Transformers use positional encoding and how it aids image retrieval, with a detailed look at the mechanisms and benefits.

What Are Vision Transformers (ViT)?

The Transition from CNNs to Vision Transformers

For a long time, Convolutional Neural Networks (CNNs) dominated image recognition tasks. CNNs are highly effective at learning local patterns, thanks to a hierarchical structure that builds increasingly abstract features in successive layers. However, each convolution only sees a small neighborhood. Relationships between distant regions of an image (global dependencies) are therefore captured only indirectly, after many layers have enlarged the receptive field.

This is where Vision Transformers come in. Unlike CNNs, ViTs can model global dependencies directly, because self-attention lets every patch interact with every other patch in a single layer. The catch is that transformers do not inherently know the order of their inputs, whether those inputs are words in a sentence or patches of an image. Positional encoding supplies this missing spatial structure.

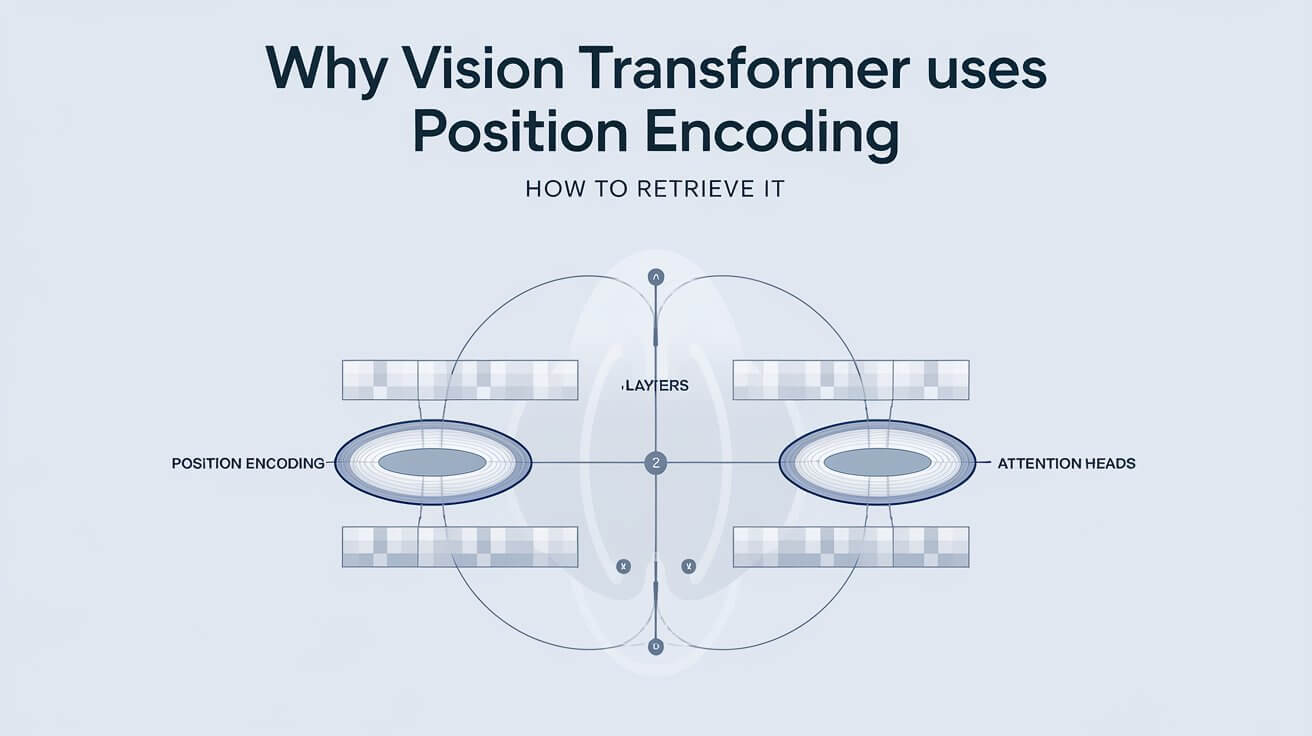

Core Components of Vision Transformers

Vision Transformers handle image data differently from CNNs. Instead of sliding small convolutional filters across the image, a ViT divides the image into non-overlapping, fixed-size patches (typically 16×16 or 32×32 pixels). Each patch is flattened and linearly projected into a vector, known as a token, much like how words are converted into embeddings in NLP transformers.
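The patch-to-token step can be sketched in plain Python. This is a minimal illustration, not a production implementation. It assumes an image stored as nested lists in H × W × C order and uses random stand-in weights for the learned projection. The names `patchify` and `linear_project` are hypothetical helpers. For a 224×224 RGB image with 16×16 patches, the same procedure yields 14 × 14 = 196 patches of 16·16·3 = 768 values each.

```python
import random
from typing import List

Image = List[List[List[float]]]  # H x W x C as nested lists (an assumption for this sketch)


def patchify(image: Image, patch: int) -> List[List[float]]:
    """Split an H x W x C image into non-overlapping patch x patch tiles and
    flatten each tile (row by row, all channels per pixel) into one vector."""
    h, w = len(image), len(image[0])
    assert h % patch == 0 and w % patch == 0, "image size must be divisible by patch size"
    patches = []
    for top in range(0, h, patch):        # patch rows, top to bottom
        for left in range(0, w, patch):   # patch columns, left to right
            vec: List[float] = []
            for r in range(top, top + patch):
                for c in range(left, left + patch):
                    vec.extend(image[r][c])  # append every channel of this pixel
            patches.append(vec)
    return patches


def linear_project(patches, weight, bias):
    """Map each flattened patch x to the token x @ weight + bias (length D)."""
    d = len(bias)
    return [[sum(x[i] * weight[i][j] for i in range(len(x))) + bias[j] for j in range(d)]
            for x in patches]


# Toy example: a 4x4 RGB image with 2x2 patches -> 4 patches of length 2*2*3 = 12
random.seed(0)
img = [[[random.random() for _ in range(3)] for _ in range(4)] for _ in range(4)]
patches = patchify(img, patch=2)
D = 8  # hypothetical embedding size
W = [[random.gauss(0, 0.02) for _ in range(D)] for _ in range(12)]  # stand-in for learned weights
tokens = linear_project(patches, W, [0.0] * D)
print(len(patches), len(patches[0]), len(tokens), len(tokens[0]))  # 4 12 4 8
```

Real implementations usually fuse these two steps into a single strided convolution. The explicit loops here only make the patch ordering (row-major over the patch grid) visible.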

Once the image is tokenized, the tokens are passed through several transformer layers. Each transformer layer has two major components:

- Multi-Head Self-Attention (MSA): This lets the model attend to different parts of the image simultaneously, computing how much attention each patch should pay to every other patch.

- Feed-Forward Network (FFN): A small MLP applied after attention to each token independently, with the same weights for every token. It refines each token's representation. Mixing information across tokens happens in the attention step, not here.
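The two components can be sketched as a single-head version in plain Python. The sketch makes several simplifying assumptions. It omits multiple heads, biases, LayerNorm and residual connections. It uses ReLU where ViT uses GELU. All weights are random stand-ins. The last lines check the point made above: running the FFN on all tokens at once gives exactly the same result as running it on each token alone, so any mixing between tokens must come from attention.

```python
import math
import random
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain-Python product of an (n x k) and a (k x m) matrix."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def softmax(row: List[float]) -> List[float]:
    m = max(row)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x: Matrix, wq: Matrix, wk: Matrix, wv: Matrix) -> Matrix:
    """Single-head scaled dot-product self-attention over the N tokens (rows) of x."""
    q, k, v = matmul(x, wq), matmul(x, wk), matmul(x, wv)
    k_t = [list(col) for col in zip(*k)]  # transpose K
    scores = matmul(q, k_t)               # N x N: how strongly token i attends to token j
    scale = 1.0 / math.sqrt(len(q[0]))
    weights = [softmax([s * scale for s in row]) for row in scores]
    return matmul(weights, v)             # each output row is a weighted mix of ALL value rows


def feed_forward(x: Matrix, w1: Matrix, w2: Matrix) -> Matrix:
    """Position-wise FFN: the same two-layer MLP (ReLU here) applied to each token on its own."""
    hidden = [[max(0.0, h) for h in row] for row in matmul(x, w1)]
    return matmul(hidden, w2)


def rand(rows: int, cols: int) -> Matrix:
    return [[random.gauss(0, 0.5) for _ in range(cols)] for _ in range(rows)]


random.seed(0)
N, D, HIDDEN = 4, 6, 12  # toy sizes: 4 tokens of dimension 6
x = rand(N, D)
attn_out = self_attention(x, rand(D, D), rand(D, D), rand(D, D))
w1, w2 = rand(D, HIDDEN), rand(HIDDEN, D)
ffn_all = feed_forward(x, w1, w2)                         # all tokens at once
ffn_each = [feed_forward([row], w1, w2)[0] for row in x]  # one token at a time
print(ffn_all == ffn_each)  # True: the FFN never looks at other tokens
```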

This patch-token approach enables the transformer to analyze the image holistically, considering both local details and global context.

Role of Positional Encoding in Vision Transformers

Self-attention, by design, is permutation-equivariant: shuffling the input tokens simply shuffles the outputs in the same way, and a pooled output does not change at all. Without external help, the model has no idea where each patch came from. In images, the arrangement of patches carries much of the meaning, so this is a serious gap, and positional encoding fills it. Positional encoding adds location information to each token, usually by summing a position vector with the patch embedding. This allows the model to distinguish patches by their place in the original image.
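The permutation argument can be checked numerically with a small plain-Python experiment. It uses random tokens, random attention weights and random stand-in "position vectors", all hypothetical values chosen only for illustration. Without positions, shuffling the patches just shuffles the attention outputs, and a mean-pooled descriptor is unchanged. Once a position vector is added to each slot, the shuffled image gets a different descriptor.

```python
import math
import random


def matmul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def attention(x, wq, wk, wv):
    """Single-head scaled dot-product self-attention with no positional information."""
    q, k, v = matmul(x, wq), matmul(x, wk), matmul(x, wv)
    scale = 1.0 / math.sqrt(len(q[0]))
    out = []
    for qi in q:
        logits = [scale * sum(a * b for a, b in zip(qi, kj)) for kj in k]
        top = max(logits)
        w = [math.exp(z - top) for z in logits]
        total = sum(w)
        out.append([sum(w[j] / total * v[j][d] for j in range(len(v))) for d in range(len(v[0]))])
    return out


def mean_pool(tokens):
    """Average the tokens into one descriptor vector."""
    return [sum(col) / len(tokens) for col in zip(*tokens)]


def close(u, v, tol=1e-9):
    return all(abs(a - b) < tol for a, b in zip(u, v))


def add(u, v):
    return [a + b for a, b in zip(u, v)]


def rand(rows, cols):
    return [[random.gauss(0, 0.5) for _ in range(cols)] for _ in range(rows)]


random.seed(1)
N, D = 4, 5
tokens, wq, wk, wv = rand(N, D), rand(D, D), rand(D, D), rand(D, D)
pos = rand(N, D)     # hypothetical position vectors, one per patch slot
perm = [2, 0, 3, 1]  # rearrange the patches
shuffled = [tokens[i] for i in perm]

out, out_shuf = attention(tokens, wq, wk, wv), attention(shuffled, wq, wk, wv)
# Equivariance: slot i of the shuffled output equals slot perm[i] of the original output
equivariant = all(close(out_shuf[i], out[p]) for i, p in enumerate(perm))
# Invariance after pooling: both arrangements get the same descriptor
pooled_same = close(mean_pool(out), mean_pool(out_shuf))

# Adding a position vector per slot breaks the symmetry
with_pos = attention([add(t, p) for t, p in zip(tokens, pos)], wq, wk, wv)
shuf_pos = attention([add(t, p) for t, p in zip(shuffled, pos)], wq, wk, wv)
pooled_differs = not close(mean_pool(with_pos), mean_pool(shuf_pos), tol=1e-6)
print(equivariant, pooled_same, pooled_differs)
```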

By embedding positional information into the tokens, ViTs retain the spatial layout of the image. This matters for tasks like image retrieval, where spatial relationships between objects strongly influence which images count as similar.
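For reference, the original ViT paper (Dosovitskiy et al., 2021) writes the input to the first transformer layer roughly as follows. Here $\mathbf{x}_p^i$ is the $i$-th flattened patch, $\mathbf{E}$ is the patch projection, $\mathbf{x}_\text{class}$ is a learnable classification token, and $\mathbf{E}_{pos}$ holds one learned position vector per token. For an $H \times W$ image with $C$ channels and patch size $P$, the number of patches is $N = HW/P^2$, and $D$ is the token dimension. The point relevant here is that position enters simply as an addition to the patch embeddings.

$$
\mathbf{z}_0 = \left[\mathbf{x}_\text{class};\ \mathbf{x}_p^1\mathbf{E};\ \mathbf{x}_p^2\mathbf{E};\ \dots;\ \mathbf{x}_p^N\mathbf{E}\right] + \mathbf{E}_{pos},
\qquad \mathbf{E} \in \mathbb{R}^{(P^2 C)\times D},\quad \mathbf{E}_{pos} \in \mathbb{R}^{(N+1)\times D}
$$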

Positional Encoding in Vision Transformers

Absolute vs. Relative Positional Encoding

There are two primary types of positional encoding: absolute and relative.

- Absolute positional encoding assigns a unique vector to each patch based on its fixed position in the image. The vectors can be fixed functions (such as sine-cosine encodings) or learned parameters, as in the original ViT. This works well when the model needs to know the exact location of objects.

- Relative positional encoding focuses on the relationships between patches, encoding the offset between two patches rather than their fixed locations. A common implementation adds a learned bias, indexed by that offset, to the attention scores. This can help the model generalize across images where objects appear at varying positions.
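The difference between the two schemes can be sketched on a toy 3×3 patch grid. The absolute table has one vector per patch location. The relative table has one scalar bias per 2D offset (dy, dx), which would be added to the attention logit between two patches, in the style of Swin Transformer's relative position bias. All table values here are random stand-ins for learned parameters, and the helper names are hypothetical.

```python
import random

GRID_H, GRID_W, D = 3, 3, 4  # toy 3x3 patch grid, hypothetical embedding size
random.seed(0)

# Absolute: one vector per patch location (learned in ViT; random stand-ins here)
abs_table = [[random.gauss(0, 0.02) for _ in range(D)] for _ in range(GRID_H * GRID_W)]


def absolute_embedding(row, col):
    """Position vector for the patch at (row, col); it is added to that patch's token."""
    return abs_table[row * GRID_W + col]


# Relative: one scalar bias per offset, table of size (2H-1) x (2W-1)
rel_table = [[random.gauss(0, 0.02) for _ in range(2 * GRID_W - 1)]
             for _ in range(2 * GRID_H - 1)]


def relative_bias(pos_i, pos_j):
    """Bias added to the attention logit between patches i and j; depends only on their offset."""
    dy = pos_i[0] - pos_j[0] + GRID_H - 1  # shift offsets into [0, 2H-2]
    dx = pos_i[1] - pos_j[1] + GRID_W - 1  # shift offsets into [0, 2W-2]
    return rel_table[dy][dx]


# Two pairs with the same offset (one patch down, one patch right) share a bias...
same = relative_bias((1, 1), (0, 0)) == relative_bias((2, 2), (1, 1))
# ...whereas absolute embeddings differ for every location
differ = absolute_embedding(0, 0) != absolute_embedding(1, 1)
print(same, differ)  # True True
```

Because the relative bias depends only on the offset, a "dog to the left of a house" pattern receives the same bias wherever it appears in the image. This is the intuition behind the generalization claim above.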

Sine-Cosine Positional Encodings

One common way to implement absolute positional encoding is with sine and cosine functions of different frequencies. Because these functions are defined for any position, encodings can be computed for grid sizes the model did not see during training, and nearby positions receive similar vectors. The method has no learned parameters and is cheap to compute, which makes it a scalable way to represent positions in large images.

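The key advantage of sine-cosine encodings is that they provide a fixed, smooth representation of position. For each frequency, the encoding of position pos + k is a fixed rotation of the encoding of pos. Relative offsets are therefore easy for the model to express, which helps it learn relationships between patches across the entire image.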
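A plain-Python sketch of 2D sine-cosine encodings follows. It assumes the original Transformer formula, with sin/cos pairs at frequencies base^(-2i/d) and base = 10000. It extends this to 2D by giving half the channels to the row index and half to the column index. This is one common 2D variant, similar in spirit to those used in later ViT-style models, not the only possible layout. The `shift_1d` helper (a hypothetical name) checks the rotation property from the paragraph above: it maps PE(pos) to PE(pos + k) using only k.

```python
import math
from typing import List


def sincos_1d(pos: float, dim: int, base: float = 10000.0) -> List[float]:
    """Pairs (sin(pos * w_i), cos(pos * w_i)) with w_i = base ** (-2i / dim)."""
    assert dim % 2 == 0
    out: List[float] = []
    for i in range(dim // 2):
        freq = base ** (-2 * i / dim)
        out += [math.sin(pos * freq), math.cos(pos * freq)]
    return out


def sincos_2d(row: int, col: int, dim: int) -> List[float]:
    """2D variant: first half of the channels encodes the row, second half the column."""
    assert dim % 4 == 0
    return sincos_1d(row, dim // 2) + sincos_1d(col, dim // 2)


def shift_1d(enc: List[float], k: float, dim: int, base: float = 10000.0) -> List[float]:
    """Turn PE(pos) into PE(pos + k) by rotating each (sin, cos) pair; pos itself is not needed."""
    out: List[float] = []
    for i in range(dim // 2):
        theta = k * base ** (-2 * i / dim)
        s, c = enc[2 * i], enc[2 * i + 1]
        out += [s * math.cos(theta) + c * math.sin(theta),   # sin(a + theta)
                c * math.cos(theta) - s * math.sin(theta)]   # cos(a + theta)
    return out


# 14 x 14 grid (224 x 224 image, 16 x 16 patches) with 768-dim tokens, as in ViT-Base
grid = [[sincos_2d(r, c, 768) for c in range(14)] for r in range(14)]
print(len(grid) * len(grid[0]), len(grid[0][0]))  # 196 768

# Offsets act linearly: PE(5 + 3) recovered from PE(5) with a fixed rotation
shifted = shift_1d(sincos_1d(5, 64), 3, 64)
print(max(abs(a - b) for a, b in zip(shifted, sincos_1d(8, 64))) < 1e-9)  # True
```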

Conditional Positional Encoding (CPE) for Vision Transformers

Another approach is Conditional Positional Encoding (CPE). Instead of using a fixed table, CPE generates each token's encoding from its local neighborhood. In the CPVT design, a Position Encoding Generator (PEG) reshapes the tokens back into a 2D grid and applies a small depthwise convolution, whose output is added to the tokens. Because the encoding is computed on the fly, it adapts both to the content around each patch and to the input size.

This makes positional encoding less rigid. The same weights work for any number of patches, so the model can handle input resolutions different from those seen in training. That flexibility may also help in image retrieval, where image sizes and object positions can vary significantly between queries.
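A PEG-style generator can be sketched in plain Python, under stated assumptions. It uses a 3×3 depthwise convolution with zero padding, one kernel per channel, over patch tokens arranged row-major. The class token is left out, and the kernel values below are hand-picked for the demo rather than learned. With identical tokens everywhere, a corner patch and the center patch still receive different encodings, because the zero padding makes border tokens see fewer neighbors. The same kernels also run unchanged on a larger grid.

```python
from typing import List


def peg(tokens: List[List[float]], h: int, w: int,
        kernels: List[List[List[float]]]) -> List[List[float]]:
    """Sketch of a Position Encoding Generator: view N = h*w tokens (row-major) as an
    h x w grid, apply a 3x3 depthwise convolution with zero padding, and add the result
    back to the tokens. kernels[d] is the 3x3 filter for channel d."""
    d_model = len(tokens[0])
    out = []
    for r in range(h):
        for c in range(w):
            enc = [0.0] * d_model
            for dr in (-1, 0, 1):
                for dc in (-1, 0, 1):
                    rr, cc = r + dr, c + dc
                    if 0 <= rr < h and 0 <= cc < w:  # outside the grid counts as zero
                        neighbour = tokens[rr * w + cc]
                        for d in range(d_model):
                            enc[d] += kernels[d][dr + 1][dc + 1] * neighbour[d]
            out.append([t + e for t, e in zip(tokens[r * w + c], enc)])
    return out


D = 2
ones_kernel = [[[1.0] * 3 for _ in range(3)] for _ in range(D)]  # demo kernel, not learned
out = peg([[1.0] * D for _ in range(3 * 3)], 3, 3, ones_kernel)
print(out[0], out[4])  # [5.0, 5.0] [10.0, 10.0]  (corner sees 4 cells, center sees 9)

# The same weights work for a different number of patches
bigger = peg([[1.0] * D for _ in range(5 * 5)], 5, 5, ones_kernel)
print(len(bigger))  # 25
```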

How Positional Encoding Helps Image Retrieval in Vision Transformers

Embedding Spatial Information in Image Features

In image retrieval tasks, the goal is to find images from a database that are visually similar to a query image. Positional encoding plays a key role by embedding spatial information into the feature vectors generated from the image patches. This allows the model to not only focus on object features but also understand where those objects are located within the image.

For instance, consider an image of a dog in front of a house. Positional encoding helps the model understand that the dog’s location relative to the house is important for recognizing similar images where the dog and house are in similar spatial arrangements.

The Process of Image Retrieval in ViT

The retrieval process in Vision Transformers typically follows these steps:

- Preprocessing: The input image is split into patches, each patch is linearly embedded, and its positional encoding is added.

- Feature Extraction: The transformer layers process the position-aware patch tokens and learn important features.

- Embedding Generation: The output tokens, which combine appearance and position information, are condensed into a global descriptor for the image. Common choices are the output of a [CLS] token or the average of the patch tokens.

- Query Matching: During retrieval, the query image is converted into a global descriptor and compared with the descriptors of database images, typically using cosine similarity. Because positional information is mixed into the features, matches can reflect spatial layout as well as appearance.
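The last two steps can be sketched in plain Python. The sketch assumes the transformer's output tokens are already available as lists. It assumes row 0 is the [CLS] token and that descriptors are compared by cosine similarity with an exhaustive scan. The toy token values and the names `global_descriptor` and `retrieve` are hypothetical. Large systems would typically swap the linear scan for an approximate nearest-neighbor index.

```python
import math
from typing import Dict, List, Tuple


def global_descriptor(output_tokens: List[List[float]], use_cls: bool = True) -> List[float]:
    """Condense output tokens into one L2-normalised vector: the [CLS] token (assumed
    to be row 0) or the mean of the remaining patch tokens."""
    if use_cls:
        vec = output_tokens[0]
    else:
        patches = output_tokens[1:]
        vec = [sum(col) / len(patches) for col in zip(*patches)]
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0  # avoid dividing by zero
    return [v / norm for v in vec]


def retrieve(query: List[float], database: Dict[str, List[float]],
             k: int = 3) -> List[Tuple[str, float]]:
    """Rank database images by cosine similarity (a dot product of normalised vectors)."""
    scores = [(name, sum(q * d for q, d in zip(query, desc))) for name, desc in database.items()]
    return sorted(scores, key=lambda s: s[1], reverse=True)[:k]


# Toy "output tokens" (row 0 = [CLS], row 1 = a patch token) for three database images
db = {
    "img_a": global_descriptor([[1.0, 0.0, 0.0], [0.9, 0.1, 0.0]]),
    "img_b": global_descriptor([[0.0, 1.0, 0.0], [0.1, 0.9, 0.0]]),
    "img_c": global_descriptor([[0.7, 0.7, 0.0], [0.5, 0.5, 0.0]]),
}
q = global_descriptor([[0.9, 0.2, 0.0], [1.0, 0.0, 0.0]])
print([name for name, _ in retrieve(q, db, k=2)])  # ['img_a', 'img_c']
```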

By combining visual features with spatial relationships, Vision Transformers can find images that are similar in both content and structure.

Retrieval Performance in Vision Transformers vs. CNNs

In image retrieval, ViTs often outperform traditional CNNs on large, complex datasets, particularly when pretrained on large amounts of data. One reason is that self-attention, together with positional encoding, captures global patterns in a single layer. CNNs, by contrast, build up global context only gradually through stacked local convolutions.

Moreover, self-attention considers the entire image at once, and positional encoding tells it where each patch is. Together, these can make ViTs better at retrieving images that differ subtly in layout or object position.

Optimization of Positional Encoding for Better Image Retrieval

Fine-Tuning Positional Encoding for Specific Datasets

While fixed positional encoding works well in general scenarios, fine-tuning the encoding for specific datasets can lead to better performance. For example, images from medical datasets often have unique spatial layouts compared to natural scenes. In such cases, learnable positional encodings can be trained to adapt to the particular spatial structures found in these specialized datasets, improving retrieval accuracy.

Trade-offs Between Absolute and Relative Encodings

There are trade-offs when choosing between absolute and relative positional encodings.

- Absolute encoding is more suited for tasks where the exact position of objects is important, such as document analysis or satellite imagery.

- Relative encoding offers more flexibility for general image retrieval tasks, where objects may appear in different parts of the image across different examples.

In real-world applications, the choice between absolute and relative encoding depends on the nature of the image data and the retrieval task at hand. For instance, in artwork retrieval, relative encoding might be more useful as the position of objects in the painting can vary widely without changing the overall meaning of the image.

Challenges and Future Directions for Vision Transformers and Positional Encoding

Limitations of Current Positional Encoding Techniques

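Despite their success, current positional encoding techniques have limitations, particularly when scaling to high-resolution images. The learned absolute embeddings used in standard ViTs are tied to the patch grid seen during training. When the resolution changes, they must be interpolated to the new grid, which can hurt accuracy unless the model is fine-tuned afterward. Fixed encodings may also struggle to capture all the necessary spatial relationships in larger, more complex images.

Additionally, long sequences of image patches are expensive to process. Adding an absolute encoding costs only one vector addition per token. Self-attention, however, grows quadratically with the number of patches, and relative schemes that add a bias for every pair of patches inherit that quadratic cost. This can make high-resolution ViTs less efficient for real-time applications.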
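Resizing a learned position-embedding grid can be sketched with plain-Python bilinear interpolation. The original ViT paper describes 2D interpolation of the pre-trained position embeddings when fine-tuning at a higher resolution. This sketch is an illustration under stated assumptions, not that paper's exact procedure. It assumes a square grid stored row-major, excludes the class-token embedding, and maps grid corners onto corners. Frameworks typically call a library resize (bilinear or bicubic) instead. For example, going from 224 to 384 pixels with 16×16 patches would resize a 14×14 grid to 24×24.

```python
from typing import List


def interpolate_pos_embed(pos: List[List[float]], old: int, new: int) -> List[List[float]]:
    """Bilinearly resize a square old x old grid of position vectors (row-major order,
    class-token embedding excluded) to a new x new grid, mapping corners onto corners."""

    def at(r: int, c: int) -> List[float]:
        return pos[r * old + c]

    def sample(y: float, x: float) -> List[float]:
        y0, x0 = int(y), int(x)                              # top-left neighbour
        y1, x1 = min(y0 + 1, old - 1), min(x0 + 1, old - 1)  # bottom-right neighbour, clamped
        fy, fx = y - y0, x - x0                              # fractional offsets
        return [(1 - fy) * ((1 - fx) * v00 + fx * v01) + fy * ((1 - fx) * v10 + fx * v11)
                for v00, v01, v10, v11 in zip(at(y0, x0), at(y0, x1), at(y1, x0), at(y1, x1))]

    scale = (old - 1) / (new - 1) if new > 1 else 0.0
    return [sample(r * scale, c * scale) for r in range(new) for c in range(new)]


# Toy 3x3 grid whose 2-dim "embeddings" are just [row, col], resized to 5x5
old_grid = [[float(r), float(c)] for r in range(3) for c in range(3)]
new_grid = interpolate_pos_embed(old_grid, old=3, new=5)
print(len(new_grid), new_grid[1 * 5 + 3])  # 25 [0.5, 1.5]
```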

New Advances in Positional Encoding

Researchers are working on dynamic positional encoding techniques that adjust to the content of the image rather than relying on fixed encodings. These approaches aim to make positional encoding more adaptable and reduce the computational burden by only encoding the most relevant spatial information.

Another promising direction is the combination of CNN-based feature extraction with transformer models, leveraging the strengths of both architectures. This hybrid approach allows transformers to benefit from the spatial locality captured by CNNs while still utilizing the global attention capabilities of the transformer architecture.
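One way such a hybrid can feed a transformer is to treat each spatial cell of a CNN feature map as a 1×1 "patch". The original ViT paper describes this as a hybrid variant. This plain-Python sketch assumes a C × H × W feature map stored as nested lists and only shows the reshaping. A real model would then project the tokens and add position encodings indexed by each cell's (row, col) in the feature-map grid.

```python
from typing import List


def feature_map_to_tokens(fmap: List[List[List[float]]]) -> List[List[float]]:
    """Flatten a C x H x W feature map into H*W tokens of dimension C (row-major order),
    i.e. each spatial cell becomes one token."""
    c, h, w = len(fmap), len(fmap[0]), len(fmap[0][0])
    return [[fmap[ch][r][col] for ch in range(c)] for r in range(h) for col in range(w)]


# Toy feature map: C=2, H=2, W=3, value encodes (channel, row, col) as ch*100 + r*10 + col
fmap = [[[ch * 100 + r * 10 + col for col in range(3)] for r in range(2)] for ch in range(2)]
tokens = feature_map_to_tokens(fmap)
print(len(tokens), tokens[4])  # 6 [11, 111]  (token 4 is the cell at row 1, col 1)
```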

Conclusion: Why Positional Encoding is Crucial for Vision Transformers

In summary, positional encoding is a vital component of Vision Transformers, enabling them to process images while preserving their spatial structure. By embedding location information into image patches, positional encoding allows ViTs to excel at tasks like image retrieval, where understanding the spatial arrangement of objects is just as important as recognizing the objects themselves.

As positional encoding techniques continue to evolve, Vision Transformers are likely to become even more capable at complex visual tasks, from medical image analysis to large-scale visual search engines. These advances will play an important role in the future of image recognition and retrieval.